Let The Training Begin!

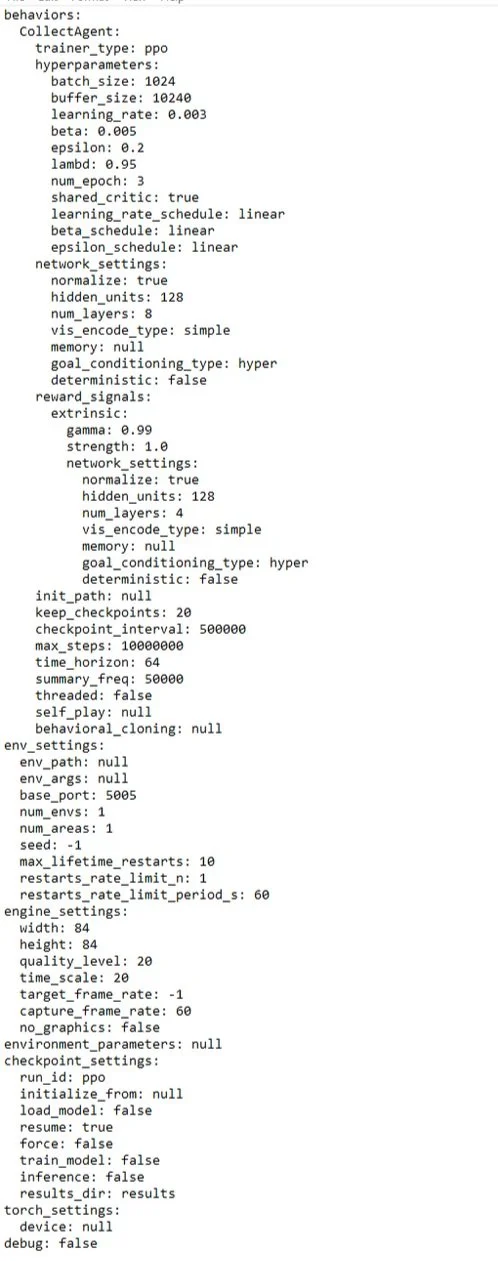

Now that we've done all the work behind creating our environment and setting up our agent, it's time to train. Before we dive in, we need to set up our hyperparameters. With Unity and the ML-Agents package, we configure these settings in a config.yaml file, which looks something like this:

Let's focus on a few key parameters:

trainer_type, learning_rate, hidden_units, num_layers, and max_steps.
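To see where these five keys sit in the file, here is a minimal Python sketch that prints a stripped-down config in the nested layout ML-Agents uses. The behavior name MyAgent, the helper render_config, and every default value are assumptions for illustration, not the settings from this project; a real file usually carries more keys (batch size, reward signals, and so on).

```python
# Minimal sketch: render a stripped-down ML-Agents-style PPO config as YAML text.
# "MyAgent" and all default values below are illustrative assumptions.

CONFIG_TEMPLATE = """\
behaviors:
  {behavior_name}:
    trainer_type: {trainer_type}
    hyperparameters:
      learning_rate: {learning_rate}
    network_settings:
      hidden_units: {hidden_units}
      num_layers: {num_layers}
    max_steps: {max_steps}
"""


def render_config(behavior_name="MyAgent", trainer_type="ppo",
                  learning_rate=3.0e-4, hidden_units=128,
                  num_layers=2, max_steps=500000):
    """Return YAML text with the five parameters at their usual nesting levels."""
    return CONFIG_TEMPLATE.format(
        behavior_name=behavior_name,
        trainer_type=trainer_type,
        learning_rate=learning_rate,
        hidden_units=hidden_units,
        num_layers=num_layers,
        max_steps=max_steps,
    )


if __name__ == "__main__":
    # Print the text; it could be saved as config.yaml by hand.
    print(render_config())
```

Changing one argument at a time and saving each result under a different file name is a simple way to keep runs comparable.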

Trainer Type

The trainer_type is crucial because it determines the algorithm our agent will use for training. Currently, we have it set to ppo (Proximal Policy Optimization), a policy-gradient method that handles continuous action spaces well. While there are other algorithms we will experiment with later, we're sticking with PPO for this training session.

Learning Rate

The learning_rate controls how far the model's weights move on each gradient update. It's a balance: too high, and training can become unstable or never converge; too low, and it might take forever to learn.
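The trade-off is easy to see on a toy problem. The sketch below is plain gradient descent on f(x) = x², not PPO itself, and the function name and the three learning rates are just values I picked for illustration.

```python
# Toy illustration: gradient descent on f(x) = x**2, whose gradient is 2*x.
# This is NOT PPO; it only shows how the step size affects convergence.

def gradient_descent(learning_rate, steps=50, start=1.0):
    """Run `steps` updates from `start` and return the final x (minimum is at 0)."""
    x = start
    for _ in range(steps):
        x -= learning_rate * 2 * x  # step against the gradient
    return x


if __name__ == "__main__":
    for lr in (1.1, 0.1, 0.001):
        print(f"learning_rate={lr}: final x = {gradient_descent(lr):.6g}")
```

With the largest rate every update overshoots the minimum and x grows instead of shrinking; with the smallest, x has barely moved after 50 steps; the middle value gets close to zero.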

Network Size

hidden_units and num_layers define the size and complexity of the neural network. hidden_units is the number of neurons in each hidden layer, while num_layers is the number of hidden layers in the network. Increasing either one gives the model more capacity to learn complex behaviors, but it also makes every training and inference step more expensive.
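To get a feel for how quickly the cost grows, we can count the weights in a plain fully connected network. This is a rough estimate under my own assumptions: the observation size of 8 and action size of 2 are hypothetical, and the network ML-Agents actually builds has extra pieces (such as a value head) that this sketch ignores.

```python
# Rough sketch: count weights and biases in a plain fully connected network.
# Input/output sizes are hypothetical; real ML-Agents models contain more parts.

def count_parameters(input_size, hidden_units, num_layers, output_size):
    """Return the number of trainable parameters in a simple dense network."""
    sizes = [input_size] + [hidden_units] * num_layers + [output_size]
    # Each dense layer has n_in * n_out weights plus n_out biases.
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


if __name__ == "__main__":
    small = count_parameters(8, hidden_units=128, num_layers=2, output_size=2)
    large = count_parameters(8, hidden_units=256, num_layers=3, output_size=2)
    print(f"128 units x 2 layers: {small} parameters")
    print(f"256 units x 3 layers: {large} parameters")
```

Because the hidden-to-hidden layers scale with hidden_units squared, doubling the width costs far more than adding a layer at the same width.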

Training Duration

Finally, max_steps sets the training duration: it is the total number of environment steps after which training ends. I usually set this quite high since I let the agents train while I'm AFK or asleep, ensuring they don't stop until I explicitly stop them.

We'll adjust these parameters as needed to optimize our agent's performance. Now, with everything set, let's begin the training!